Entrepreneurs of Insight

DataJoint and the Future of Knowledge Creation

The world of scientific discovery is in flux, undergoing a transformation that redefines not only how research is conducted but also how its intellectual fruits are managed on a global scale. To understand the current juncture, it helps to consider the historical trajectory of basic research across leading scientific nations.

Before World War II, universities, particularly in Europe, had strong traditions of fundamental inquiry, but in technologically advancing countries a significant share of pioneering fundamental science also came from industrial laboratories. In the United States, for instance, Bell Labs, an industrial research powerhouse, was the birthplace of the transistor in 1947. Although the invention came just after the war, it grew out of pre-war and wartime research programs typical of an era in which industry led certain kinds of foundational science. Similarly, DuPont's development of nylon in the 1930s, a groundbreaking synthetic material, stemmed from the company's commitment to long-term chemical research. These examples illustrate an era in which industrial investment was critical to certain capital-intensive, foundational breakthroughs. Those breakthroughs often lay in fields aligned with the companies' commercial interests, yet they profoundly expanded scientific frontiers.

The landscape shifted dramatically after World War II, as nations adapted their science policies. In the United States, the shift was famously catalyzed by influential reports such as Vannevar Bush's 1945 "Science, the Endless Frontier," which advocated massive, sustained federal investment in basic research, primarily through universities, as an engine of national progress. The subsequent establishment of the National Science Foundation (NSF) and the expansion of the National Institutes of Health (NIH) embodied this strategy. This model of publicly funded, university-based research led to an explosion of academic discoveries. Examples include the elucidation of the DNA double helix in 1953 by Watson and Crick (supported by a mix of academic, philanthropic, and Medical Research Council funding in the UK) and the development of the maser and laser by Charles Townes and others (with university research often backed by government contracts). Other leading nations also significantly increased state funding for science, developing their own models that shaped the global scientific ecosystem and intensified both international collaboration and competition.

As a result, universities and dedicated public research institutions worldwide became the principal venues for early-stage investigation. Leading corporations did not abandon basic research entirely; many maintained formidable research divisions pursuing strategic long-term goals. But their share of national basic research shrank as public funding grew, and major public investment in broad, non-directed inquiry concentrated in academia.

Today, academic and public research institutions remain the cornerstones of basic research. However, this model is under strain. Public funding, while substantial, often struggles to keep pace with the escalating cost, complexity, and scale of cutting-edge science. The development of modern large AI models illustrates this starkly. The foundational algorithmic breakthroughs and early advances in deep learning often emerged from academic settings, driven by researchers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio. Reaching today's powerful large language and generative AI models, however, required computational resources, engineering teams, and datasets at a scale far beyond what typical academic grants can support. It took venture backing, massive corporate investment, and trillion-dollar valuations to achieve this. Some areas of what is effectively basic research now demand financial and infrastructural scale previously unimaginable in academic settings.

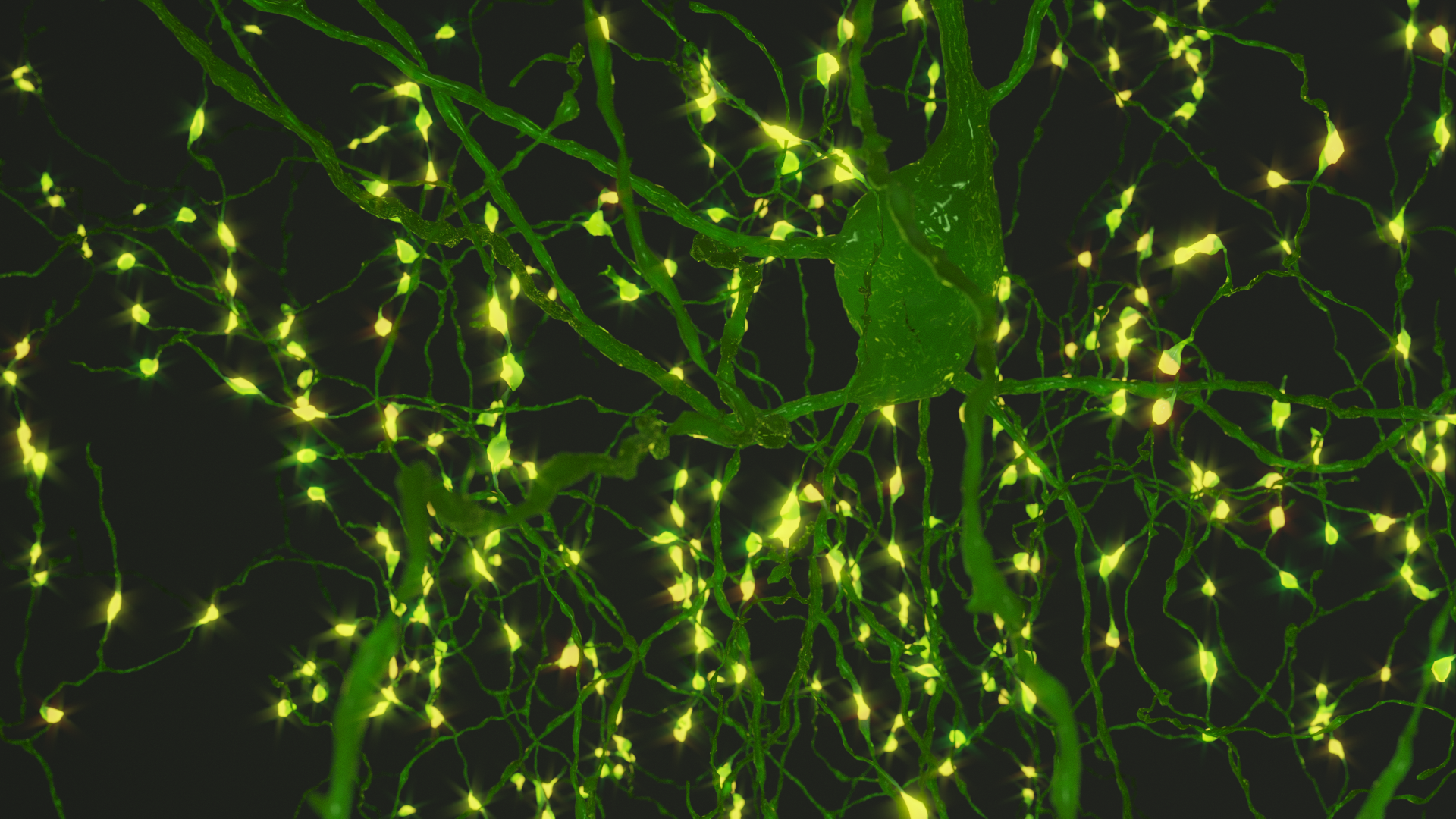

This challenge is not unique to AI. Discussions at pivotal meetings, such as the November 2024 NIH NeuroAI Workshop, which I had the privilege to join, have highlighted that understanding the brain's immense complexity will require scalability in data acquisition, processing, and model building comparable to that of leading AI companies. Participants noted that the traditional federal grant system is often poorly suited to the massive, collaborative, and computationally intensive joint neuroscience and AI studies needed for transformative breakthroughs.

This evolving global context calls for a "new knowledge capitalism." Basic science will likely continue to germinate primarily in academic labs, but the ways those labs integrate with industry and, critically, the ways they manage knowledge must evolve. This new model recognizes and strategically manages all outputs of research as valuable research assets: not just publications, but also the intricate scientific workflows and the large, curated datasets acquired along the way.

This upcoming transformation demands deliberate focus on knowledge ownership, licensing, and comprehensive data governance. As research becomes more collaborative and its outputs more complex and valuable, clarity on these issues is paramount. Academic institutions, departments, individual labs, and the researchers themselves will require and expect clear definitions of their respective ownership shares and usage rights for both intellectual property (methods, software, inventions) and the data assets generated. Robust data governance frameworks covering both the data itself (its quality, security, accessibility, and provenance) and the methods used to produce and analyze it will become central to the scientific enterprise.

Research Communications in the New Knowledge Capitalism

The imperatives of this new scientific enterprise also reshape our approach to research communication. It is important to recognize that the best ways to conduct science often differ significantly from the best ways to communicate it. Discovery is frequently complex and iterative, involving vast amounts of data, many explored analytical paths, and numerous supporting details. Research communication, by contrast, whether in publications, presentations, or public discourse, thrives on simplicity, a clear focus on the main findings, and carefully presented controls that substantiate those findings. Detail that is vital during the research process can detract from the core message when the work is communicated.

The primary purpose of research communication is didactic; it aims to inform, educate, and allow the broader scientific community and society to understand and build upon new knowledge. Paramount to this is scientific integrity. Findings must be presented transparently and honestly, allowing for scrutiny and verification.

This is where a platform like DataJoint plays a dual role. While it manages the full complexity of research conducted by teams—the intricate pipelines, diverse datasets, and varied computational explorations—it also provides the tools to support clear and responsible communication. DataJoint allows researchers to precisely extract specific cross-sections of data, analyses, and workflow components that directly underpin the scientific findings being communicated. This enables the creation of focused narratives supported by verifiable evidence.
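To make "extracting a cross-section" concrete, here is a minimal sketch in relational terms. DataJoint pipelines are built on a relational database, and DataJoint expresses such selections with its own query operators on table objects (restriction with `&`, join with `*`, projection with `.proj()`). The sketch below does not use DataJoint: it uses Python's built-in `sqlite3` module as a stand-in, and the `session` and `analysis` tables, their columns, and all values are hypothetical.

```python
import sqlite3

# In-memory database standing in for a pipeline's relational backend.
# Table names, columns, and values are hypothetical illustrations.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE session (
    session_id INTEGER PRIMARY KEY,
    subject    TEXT NOT NULL,
    task       TEXT NOT NULL
);
CREATE TABLE analysis (
    session_id  INTEGER NOT NULL REFERENCES session(session_id),
    method      TEXT NOT NULL,
    effect_size REAL NOT NULL,
    PRIMARY KEY (session_id, method)
);
""")
conn.executemany("INSERT INTO session VALUES (?, ?, ?)", [
    (1, "mouse_A", "navigation"),
    (2, "mouse_A", "foraging"),
    (3, "mouse_B", "navigation"),
])
conn.executemany("INSERT INTO analysis VALUES (?, ?, ?)", [
    (1, "decoder_v1", 0.42),
    (1, "decoder_v2", 0.47),
    (2, "decoder_v2", 0.10),
    (3, "decoder_v2", 0.39),
])


def cross_section(task, method):
    """Restrict sessions to one task, join them with one analysis method,
    and project only the columns that support a reported finding."""
    return conn.execute(
        """
        SELECT s.session_id, s.subject, a.effect_size
        FROM session AS s
        JOIN analysis AS a ON a.session_id = s.session_id
        WHERE s.task = ? AND a.method = ?
        ORDER BY s.session_id
        """,
        (task, method),
    ).fetchall()


print(cross_section("navigation", "decoder_v2"))
# [(1, 'mouse_A', 0.47), (3, 'mouse_B', 0.39)]
```

Because the selection is an explicit, rerunnable query rather than a hand-assembled set of files, the same query can later show collaborators or reviewers exactly which rows and which analysis method a reported result rests on.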

However, this capability must be wielded with great scientific caution. The ability to select specific data or analytical approaches for presentation carries an inherent risk of bias, such as p-hacking (running many analyses and reporting only those that reach statistical significance) or cherry-picking data or methods that inappropriately inflate findings. In the new knowledge capitalism, where the perceived value of research assets is critical, maintaining the highest standards of integrity in communication is more important than ever.

DataJoint can contribute to mitigating these risks, not by policing thought, but by enabling more transparent and accountable practices:

- Traceability: Communicated findings can be directly traced back to their origins within the managed DataJoint pipeline, including specific data versions and computational steps.

- Contextual Record: The full pipeline, which may encompass a broader range of analyses than a concise communication presents, can serve as a comprehensive record. Although it is not always fully public because of IP considerations, this underlying structure can support deeper review by collaborators, by reviewers (under appropriate agreements), or for internal validation.

- Support for Rigor: By structuring the research process, DataJoint encourages systematic exploration and documentation, which, when combined with ethical research practices and pre-registration of analysis plans where appropriate, can help ensure that communicated results are robust and represent the science faithfully.
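The traceability idea can be illustrated with a small sketch. In DataJoint, dependencies between tables are declared as foreign keys in table definitions, so a pipeline forms a directed acyclic graph. The sketch below models such a graph as a plain Python dictionary and walks it upstream from a result to every table that result depends on. The table names (including `Figure3`) are hypothetical, and this illustrates the concept only; it is not DataJoint's API.

```python
from collections import deque

# Hypothetical pipeline: each table maps to the upstream tables it depends on.
DEPENDENCIES = {
    "Subject": [],
    "Session": ["Subject"],
    "Recording": ["Session"],
    "SortingParams": [],
    "SpikeSorting": ["Recording", "SortingParams"],
    "DecodingResult": ["SpikeSorting", "Session"],
    "Figure3": ["DecodingResult"],
}


def upstream(table, deps=DEPENDENCIES):
    """Return every table that `table` transitively depends on,
    in breadth-first order starting from its direct parents."""
    seen, order = set(), []
    queue = deque(deps[table])
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # shared ancestors are listed only once
        seen.add(parent)
        order.append(parent)
        queue.extend(deps[parent])
    return order


print(upstream("Figure3"))
# ['DecodingResult', 'SpikeSorting', 'Session', 'Recording', 'SortingParams', 'Subject']
```

A table-level walk like this names the sessions, recordings, and parameter sets a published figure rests on. Tracing individual entries works in the same spirit, since in DataJoint computed tables typically inherit the primary keys of their upstream dependencies.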

Ultimately, while tools like DataJoint provide powerful capabilities for both conducting and communicating science, the responsibility for ethical conduct and transparent reporting rests with the researchers and the scientific community. In this new era, where scientific assets have explicit value, ensuring that communications are both compelling and rigorously honest is fundamental to building trust and fostering genuine progress.

DataJoint: Enabling the Transformation

DataJoint is at the forefront of this evolution, providing the framework for this new global scientific enterprise. We understand that for research entities to thrive, they need tools that facilitate both robust international collaboration and stringent protection and management of their unique assets:

- Develop, Extend, and Preserve Research Workflows and Data Assets with Secure IP Retention: DataJoint provides a robust framework for creating, managing, and evolving complex data pipelines, treating both the workflow logic and the acquired data as first-class, interlinked assets. This systematic approach inherently documents the research process. DataJoint’s architecture enables granular control, allowing labs to define what is shared and with whom—whether collaborators are domestic or international—ensuring core intellectual property and sensitive data remain secure and properly attributed.

- Achieve Seamless Compatibility with Global Partners (Data Ops, Research Ops, SciOps): DataJoint's standardized data models and reproducible analyses align with rigorous operational demands worldwide, facilitating smoother international collaborations.

- Champion Reproducible Science While Strategically Managing Know-How and Data Assets: DataJoint enables transparency for validation while empowering institutions and researchers to protect core methods and valuable datasets, balancing openness with the strategic management of assets in a competitive global environment.

- Manage Knowledge Assets and Uphold FAIR Principles with Comprehensive Governance: DataJoint supports FAIR principles for both workflows and data. Crucially, it provides the infrastructure for implementing detailed data governance and defining knowledge ownership structures. Its relational database foundation and workflow management capabilities allow for precise tracking of contributions, data provenance, and processing steps, which is essential for delineating ownership and licensing rights among institutions, departments, labs, and individual researchers. This facilitates the clear articulation and enforcement of policies regarding data and IP sharing, usage, and commercialization.

Empowering the Future: International Collaboration, Governance, Security, and Global Competitiveness

The current scientific era is defined by unprecedented opportunities for international collaboration to tackle grand challenges. DataJoint provides a common operational language and robust data management infrastructure that allows geographically dispersed teams to work together seamlessly, share insights responsibly, and accelerate discovery.

However, this occurs within a landscape of intense global competition. Nations and institutions vie for scientific leadership. DataJoint supports this by enabling rigorous data governance and IP management that helps protect a research entity’s competitive edge while still allowing for controlled collaboration. Its capabilities are crucial for implementing clear knowledge ownership and licensing frameworks, ensuring that contributions are properly recognized and benefits fairly distributed according to predefined agreements between universities, labs, and researchers.

DataJoint is engineered to provide fine-grained access controls, auditable data and computation trails, and a secure environment. This allows entities to collaborate confidently, sharing necessary components of data and workflows while protecting proprietary elements critical to their strategic interests.

The future of science is global, collaborative, and competitive, with an increasing emphasis on the strategic management of knowledge and data assets, and a renewed focus on the integrity of its communication. DataJoint is committed to empowering researchers and institutions worldwide to navigate this new landscape successfully, providing the tools to conduct world-class science, strategically manage their intellectual assets with clear governance and ownership structures, and forge a more impactful, secure, and sustainable scientific future for all.
